Demoman

Jul 12, 09:36 PM

This thread is getting too funny. Apple has been so far behind on power these past few years and now we get the chance to use Conroe, and suddenly that's not good enough for the Mac snobs. Conroe is an extremely fast chip (especially compared to the G5), so I don't get why some people think it's a bad choice for the pro lineup. Sure, it can't do SMP, but not everyone needs or wants to pay for quad processing.

So, aside from the ability to do multiple processing, what advantages does Woodcrest have that make it mandatory to go in the pro-line? How much "faster" is it going to be over the Conroe? It's my understanding that they are identical in that respect.

SW engineers usually optimize their systems for the environment they expect them to run in. Pro-level applications often run much better on systems that use SMP, but not all do. Sometimes it is better to pipeline a few processes at high speed than to do a lot of task swapping. Most of Apple's core customers' applications seem to benefit from SMP, so that is what they are going to expect from pro-level hardware.

Cabbit

Apr 15, 12:47 PM

Not if you believe HBO! All Roman women were raging lesbians (or at least bi-sexual).

The hunky men, not so much... *sigh*

:p

A married woman of high standing was not allowed, but lower classes were. A man or woman could have a man, woman, child or animal if they wished.

.Andy

Apr 23, 03:53 PM

http://carm.org/entropy-and-causality-used-proof-gods-existence

Of course this is a Christian Apologetics site so necessarily biased.

This "proof" is full of the most hilariously appalling non-sequiturs :D!

AP_piano295

Apr 26, 01:27 PM

Not all religion is about the belief in God. In Buddhism (http://buddhismbeliefs.org/), it doesn't matter one way or the other if God exists or not. In many ways, my thinking follows the Buddhist way. By its very definition (http://dictionary.reference.com/browse/religion), atheism can be considered a religion. #2 a specific fundamental set of beliefs and practices generally agreed upon by a number of persons or sects: the Christian religion; the Buddhist religion.

Atheists believe in the non-existence of God; some as fervently as Christians believe in one.

As for trying to prove or disprove the existence of God: many men and women, much smarter and better qualified than me, have tried. All have failed. I don't bother with the impossible. ;)

I'm getting tired of shooting down this massive and prevalent misconception over and over again, so I'll just copy and paste my post from the "why are there so many atheists" thread.

For a start, atheism (as I see it) is not a belief system. I don't even like to use the term atheist because it grants religion(s) a much higher status than I think it deserves. The term atheism gives the impression that I have purposefully decided NOT to believe in god or religion.

I have not chosen not to believe in god or god(s). I just have no reason to believe that they exist because I have seen nothing which suggests their existence.

I don't claim to understand how the universe/matter/energy/life came to be, but the ancient Greeks didn't understand lightning. The fact that they didn't understand lightning made Zeus no more real and electricity no less real. The fact that I do not understand abiogenesis (the formation of living matter from non-living matter) does not mean that it is beyond understanding.

The fact that there is much currently beyond the scope of human understanding in no way suggests the existence of god.

In much the same way that one's inability to see through a closed door doesn't suggest that the room beyond is filled with leprechauns.

A lack of information does not arbitrarily suggest the nature of the lacking knowledge. Any speculation which isn't based upon available information is simply meaningless speculation, nothing more.

Atheism is no more a religion than failing to believe in leprechauns is a religion. :rolleyes:

faroZ06

May 2, 06:26 PM

Switching off or turning down UAC in Windows also equally impacts the strength of MIC (Windows sandboxing mechanism) because it functions based on inherited permissions. Unix DAC in Mac OS X functions via inherited permissions but MAC (mandatory access controls -> OS X sandbox) does not. Windows does not have a sandbox like OS X.

UAC, by default, does not use a unique identifier (password), so it is more susceptible to attacks that rely on spoofing prompts that appear to be unrelated to UAC to steal authentication. If a password is attached to authentication, these spoofed prompts fail to work.

Having a password associated with permissions has other benefits as well.

If "Open safe files after downloading" is turned on, it will both unarchive the zip file and launch the installer. Installers are marked as safe to launch because they require authentication to complete installation.

No harm can be done from just launching the installer. But, you are correct in that code is being executed in user space.

Code run in user space is used to achieve privilege escalation via exploitation or social engineering (trick user to authenticate -> as in this malware). There is very little that can be done beyond prank style attacks with only user level access. System level access is required for usefully dangerous malware install, such as keyloggers that can log protected passwords. This is why there is little malware for Mac OS X. Achieving system level access to Windows via exploitation is much easier.

Webkit2 will further reduce the possibility of even achieving user level access.

The article suggested that the installer completed itself without authentication. I don't see how that is possible unless you are using the root account or something. It would give sudo access, but even still you'd get SOME dialog box :confused:

alex_ant

Oct 9, 08:08 PM

Originally posted by gopher

Maybe we have, but nobody has provided compelling evidence to the contrary.

You must be joking. Reference after reference has been provided and you simply break from the thread, only to re-emerge in another thread later. This has happened at least twice now that I can remember.

The Mac hardware is capable of 18 billion floating calculations a second. Whether the software takes advantage of it that's another issue entirely.

My arse is capable of making 8-pound turds, but whether or not I eat enough baked beans to take advantage of that is another issue entirely. In other words,

18 gigaflops = about as likely as an 8-pound turd in my toilet. Possible, yes (under the most severely ridiculous conditions). Real-world, no.

If someone is going to argue that Macs don't have good floating point performance, just look at the specs.

For the - what is this, fifth? - time now: AltiVec is incapable of double precision, and is capable of accelerating only that code which is written specifically to take advantage of it. Which is some of it. Which means any high "gigaflops" performance quotes deserve large asterisks next to them.

If they really want good performance and aren't getting it they need to contact their favorite developer to work with the specs and Apple's developer relations.

Exactly, this is the whole problem - if a developer wants good performance and can't get it, they have to jump through hoops and waste time and money that they shouldn't have to waste.

Apple provides the hardware, it is up to developer companies to utilize the hardware the best way they can. If they can't utilize Apple's hardware to its most efficient mode, then they should find better developers.

Way to encourage Mac development, huh? "Hey guys, come develop for our platform! We've got a 3.5% national desktop market share and a 2% world desktop market share, and we have an uncertain future! We want YOU to spend time and money porting your software to OUR platform, and on top of that, we want YOU to go the extra mile to waste time and money that you shouldn't have to waste just to ensure that your code doesn't run like a dog on our ancient wack-job hack of a processor!"

If you are going to complain that Apple doesn't have good floating point performance, don't use a PC biased spec like Specfp.

"PC biased spec like SPECfp?" Yes, the reason PPC does so poorly in SPEC is because SPECfp is biased towards Intel, AMD, Sun, MIPS, HP/Compaq, and IBM (all of whose chips blow the G4 out of the water, and not only the x86 chips - the workstation and server chips too, literally ALL of them), and Apple's miserable performance is a conspiracy engineered by The Man, right?

Go by actual floating point calculations a second.

Why? FLOPS is as dumb a benchmark as MIPS. That's the reason cross-platform benchmarks exist.

Nobody has shown anything to say that PCs can do more floating point calculations a second. And until someone does I stand by my claim.

An Athlon 1700+ scores about what, 575 in SPECfp2000 (depending on the system)? Results for the 1.25GHz G4 are unavailable (because Apple is ashamed to publish them), but the 1GHz does about 175. Let's be very gracious and assume the new GCC has got the 1.25GHz G4 up to 300. That's STILL terrible. So how about an accurate summary of the G4's floating point performance:

On the whole, poor.******

* Very strong on applications well-suited to AltiVec and optimized to take advantage of it.
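
To put rough numbers on that gap, here is a quick sketch using only the approximate SPECfp2000 scores quoted above. The 300 figure is the generous guess from the post, not a published result, and the variable and function names are made up for illustration.

```python
# Approximate SPECfp2000 scores as quoted in the post (not official results).
athlon_1700 = 575      # "about 575", depending on the system
g4_1ghz = 175          # "about 175"
g4_1250_guess = 300    # hypothetical best case assumed in the post


def ratio(a, b):
    """Return how many times higher score a is than score b."""
    return a / b


print(f"Athlon vs 1GHz G4: {ratio(athlon_1700, g4_1ghz):.2f}x")
print(f"Athlon vs assumed 1.25GHz G4: {ratio(athlon_1700, g4_1250_guess):.2f}x")
```

Even with the generous guess, the Athlon score comes out at nearly twice the G4's.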

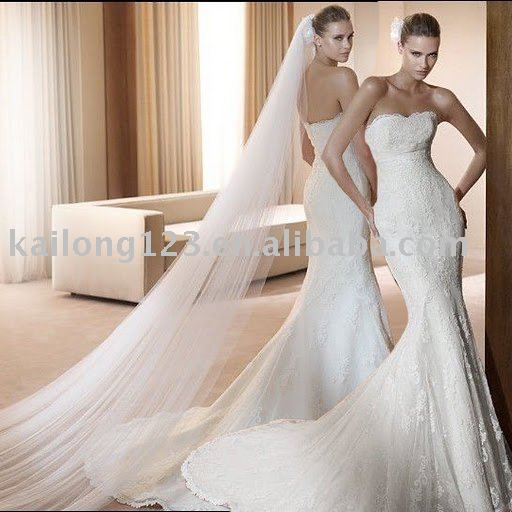

2011 new lace wedding dress

sleeves lace wedding dress

Dresses WM-0425. Strapless

2011 Valisere Multilayer Lace

wedding dresses 2011 lace.

Arabic. WD-122 2011 Allure

Arabic. EMCN1352 2011 Sexy

wedding dresses 2011 lace.

Lace Wedding Dress(China

2011 lace high neck wedding

Dresses WL-0160. Strapless

white lace wedding dress 2011

lace wedding dresses 2011,

Reacent Post

Maybe we have, but nobody has provided compelling evidence to the contrary.

You must be joking. Reference after reference has been provided and you simply break from the thread, only to re-emerge in another thread later. This has happened at least twice now that I can remember.

The Mac hardware is capable of 18 billion floating calculations a second. Whether the software takes advantage of it that's another issue entirely.

My arse is capable of making 8-pound turds, but whether or not I eat enough baked beans to take advantage of that is another issue entirely. In other words,

18 gigaflops = about as likely as an 8-pound turd in my toilet. Possible, yes (under the most severely ridiculous condtions). Real-world, no.

If someone is going to argue that Macs don't have good floating point performance, just look at the specs.

For the - what is this, fifth? - time now: AltiVec is incapable of double precision, and is capable of accelerating only that code which is written specifically to take advantage of it. Which is some of it. Which means any high "gigaflops" performance quotes deserve large asterisks next to them.

If they really want good performance and aren't getting it they need to contact their favorite developer to work with the specs and Apple's developer relations.

Exactly, this is the whole problem - if a developer wants good performance and can't get it, they have to jump through hoops and waste time and money that they shouldn't have to waste.

Apple provides the hardware, it is up to developer companies to utilize the hardware the best way they can. If they can't utilize Apple's hardware to its most efficient mode, then they should find better developers.

Way to encourage Mac development, huh? "Hey guys, come develop for our platform! We've got a 3.5% national desktop market share and a 2% world desktop market share, and we have an uncertain future! We want YOU to spend time and money porting your software to OUR platform, and on top of that, we want YOU to go the extra mile to waste time and money that you shouldn't have to waste just to ensure that your code doesn't run like a dog on our ancient wack-job hack of a processor!"

If you are going to complain that Apple doesn't have good floating point performance, don't use a PC biased spec like Specfp.

"PC biased spec like SPECfp?" Yes, the reason PPC does so poorly in SPEC is because SPECfp is biased towards Intel, AMD, Sun, MIPS, HP/Compaq, and IBM (all of whose chips blow the G4 out of the water, and not only the x86 chips - the workstation and server chips too, literally ALL of them), and Apple's miserable performance is a conspiracy engineered by The Man, right?

Go by actual floating point calculations a second.

Why? FLOPS is as dumb a benchmark as MIPS. That's the reason cross-platform benchmarks exist.

Nobody has shown anything to say that PCs can do more floating point calculations a second. And until someone does I stand by my claim.

An Athlon 1700+ scores about what, 575 in SPECfp2000 (depending on the system)? Results for the 1.25GHz G4 are unavailable (because Apple is ashamed to publish them), but the 1GHz does about 175. Let's be very gracious and assume the new GCC has got the 1.25GHz G4 up to 300. That's STILL terrible. So how about an accurate summary of the G4's floating point performance:

On the whole, poor.******

* Very strong on applications well-suited to AltiVec and optimized to take advantage of it.

blahblah100

Apr 28, 02:57 PM

Ever heard of the Mac Mini???

The day Apple starts making Netbook quality computers I will start hating Apple.

How good is a cheap computer when it works like crap? I know many people who bought cheap PCs and laptops, and when I tried to use them, it was very annoying how slow they were.

Wait, is that the $700 computer that has a Core 2 Duo and no keyboard/mouse? :rolleyes:

Project

Sep 20, 01:55 AM

I hate to be the first to post a negative but here it is. I don't think this will be overly expensive, but I also think we will be underwhelmed with its features. Wireless is not that important to me. There are many wires back there already. It sounds like it will not have HDMI or TiVo features, and it will play movies out of iTunes, which screams to me that it will only play .mp4 and .m4v files much like my 5G iPod. If it cannot browse my Mac or firedrive, cannot stream from them, cannot play .avi, .wmv, .rm or VCD, then it will not replace my 4 year old xbox, which itself has a 120Gig drive and a remote. Unless we are all sorely mistaken about what iTV will end up being, and it ends up adding these features (as someone above me noted, hoping Apple would read this forum), I will wait. Honestly, I am far more excited over the prospect of the MacBook Pros hopefully switching to Core 2 Duos before year end. Then I will have a much more powerful machine slung to my firedrive, router, xbox and tv. :)

It's Front Row, which can play whatever QuickTime can play. That means it can play avi, wmv etc. Just install the codecs.

mac jones

Mar 12, 05:13 AM

Not once have I said anything is safe. Not once have I said there is nothing to worry about; just the opposite--it's a serious situation and could get worse.

All I've said is we don't have enough information to make much of an assessment and to not panic.

With all due respect, somebody who doesn't even realize hydrogen is explosive isn't really in a position to tell someone holding two degrees in the field and speaking a good amount of the local language that he's de facto right and I'm de facto wrong.

Are they 100% up front, or are we going to have to wait for some potentially very bad news?

Certainly panic is not an option, ever. But I have little faith in government officials at the beginning of a crisis.

Doctor Q

Mar 18, 03:54 PM

I'm not pleased with this development, because Apple's DRM is necessary to maintain the compromise they made with the record labels and allow the iTunes Music Store to exist in the first place. If the labels get the jitters about how well Apple is controlling distribution, that threatens a good part of our "supply" of music, even though I wouldn't expect a large percentage of mainstream customers to actually use a program like PyMusique.

Will Apple be able to teach the iTunes Music Store to distinguish the real iTunes client from PyMusique with software changes only on the server side? If not, I imagine that only an iTunes update (which people would have to install) could stop the program from working.

Suppose iTunes is updated to use a new "secret handshake" with the iTunes Music Store in order to stop other clients from spoofing iTunes. Will iTunes have any way to distinguish tunes previously purchased through PyMusique from tunes acquired from other sources, i.e., ripped from CDs? Perhaps the tags identify them as coming from iTMS and iTunes could apply DRM after the fact. Then again, tags can be removed.

iMikeT

Aug 29, 11:01 AM

Why do these "tree-huggers" have to interfere with business?

Apple does what they can to have more "environmentally-friendly" ways of processing their products. But 4th worst?

Gelfin

Apr 24, 03:03 PM

In answer to the OP's question, I have long harbored the suspicion (without any clear idea how to test it) that human beings have evolved their penchant for accepting nonsense. On the face of it, accepting that which does not correspond with reality is a very costly behavior. Animals that believe they need to sacrifice part of their food supply should be that much less likely to survive than those without that belief.

My hunch, however, is that the willingness to play along with certain kinds of nonsense games, including religion and other ritualized activities, is a social bonding mechanism in humans so deeply ingrained that it is difficult for us to step outside ourselves and recognize it for a game. One's willingness to play along with the rituals of a culture signifies that his need to be a part of the community is stronger than his need for rational justification. Consenting to accept a manufactured truth is an act of submission. It generates social cohesion and establishes shibboleths. In a way it is a constant background radiation of codependence and enablement permeating human existence.

If I go way too far out on this particular limb, I actually suspect that the ability to prioritize rational justification over social submission is a more recent development than we realize, and that this development is still competing with the old instincts for social cohesion. Perhaps this is the reason that atheists and skeptics are typically considered more objectionable than those with differing religious or supernatural beliefs. Playing the game under slightly different rules seems less dangerous than refusing to play at all.

Think of the undertones of the intuitive stereotype many people have of skeptics: many people automatically imagine a sort of bristly, unfriendly loner who isn't really happy and is always trying to make other people unhappy too. There is really no factual basis for this caricature, and yet it is almost universal. On this account, when we become adults we do not stop playing games of make-believe. Instead we just start taking our games of make-believe very seriously, and our intuitive sense is that someone who rejects our games is rejecting us. Such a person feels untrustworthy in a way we would find hard to justify.

Religions are hardly the only source of this sort of game. I suspect they are everywhere, often too subtle to notice, but religions are by far the largest, oldest, most obtrusive example.

PghLondon

Apr 28, 01:40 PM

Really?

So I can take an iPad out of the box and use it without ever involving a "pc?"

If so, I must have a defective iPad since mine was completely useless until I connected it to iTunes ON A PC... :eek:

As has been stated (literally) hundreds of times:

Any Apple retailer will do your initial sync, free of charge.

gnasher729

Apr 9, 10:58 AM

Poaching suggests illegal, secret, stealing or other misadventure that is underhanded and sneaky.

From what I've read so far, and I'd be glad for someone to show me what I've missed, Apple had the job positions already advertised and for all we know these individuals, realizing their companies were sliding, applied to - and were received by - Apple, which replied with open arms. Does anyone have evidence to the contrary? Would that be poaching? Is this forum, like some others, doing headline greed?

There was a bit of trouble a while ago because some major companies (I think Apple, Google, and someone else) apparently had a "no poaching" agreement, agreeing that they wouldn't make job offers to people employed by the other companies. That is considered bad, because it means someone employed by, say, Google for $100,000 a year can't get a job offer from Apple for $110,000 a year, so salaries are kept down. While companies may not like poaching, employees like it.

And what makes you say "these individuals, realizing their companies were sliding..." ? The company I work for is doing very well, but if someone else offered me a much higher salary, or better career opportunities, or much better working conditions, or a much more interesting job, why wouldn't I consider that?

Multimedia

Oct 24, 11:53 PM

Damn multimedia, you are making me want that Dell! I just went to the Apple store to check out the 30" (pulled a stool up to the machine from the genius bar and tried to see if I could handle all that real estate). I am usually a sucker for Apple stuff and having matching componentry... but that Dell is so CHEAP!

AV/multimedia, how far do you sit from your screen?

Mine are up against the wall at the back of 3 foot deep tables. I have an L table setup with a 6x3 and 8x3 for a total of 9x8 so I'm usually about 3-4 feet away.

Yes, the Dells are low priced (http://accessories.us.dell.com/sna/productlisting.aspx?c=us&category_id=6198&cs=19&l=en&s=dhs) but they do not look or feel cheap in any way. I really prefer the black or dark brown frames Dell uses. In the dark the screens float and the frames do not reflect anything from the screens like the Apple Aluminum frames must. Plus they all have 4-port USB 2 hubs in them and 9-type memory card readers. And they all have elevators so you can adjust the height, which Apple's do not. I love the new x07 model Dell stands, which differ from the x05 model stands. And they are all VESA mount compatible as well. I'm gonna get another Dell 30" next Spring or as soon as they hit $999, whichever comes first - watch out Black Friday - November 24. :p

The popularity of 30" monitors has got to be going through the roof right now with prices dropping this rapidly. I can really see the end of all CRTs now.

unlinked

Apr 9, 03:58 PM

Why would I do that?

People who have issues with uncontracted negative questions have been known to display a wide range of linguistic disorders.

gugy

Sep 20, 01:38 PM

The iTV makes the elgato eyetv hybrid even more appealing. :)

http://www.elgato.com/index.php?file=products_eyetvhybridna

Use it to record your shows and then stream it to the iTV.

-bye bye comcast DVR.

what about calling it the iStream (ha)

yeah, that looks cool.

I am seriously considering buying one. Plus get an external antenna and get HDTV. sweet.:D

But I would still keep my dishnetwork DVR. I think it will take time to completely get rid of any cable/dvr package.

The fact that the computer has to be on every time I want to watch or record a show is somewhat of a hassle.

iJohnHenry

Mar 14, 04:34 PM

Does a partial melt-down equate with being a little bit pregnant?

of course things could still go South, but hopefully they won't

Inscrutable cat says

ACED

Mar 18, 04:15 PM

Like, where's my credit for providing Macrumors with the link/story, about 8 hours ago???

Guess that 'DRM' has been stripped....hmmm...the irony

mac jones

Mar 12, 03:58 AM

Hey, I've been hanging out on the forum for the iPad. But frankly I'm a little confused right now about what I just saw. From appearances (I mean appearances), the nuke plant in Japan BLEW UP, and they are lying about it if they say it's a minor issue. I don't want to believe this. You can see it with your own eyes, but I'm not sure exactly what I'm seeing. Certainly it isn't a small explosion.

Until I know what's really happening I'm officially, totally, freaked out......Any takers? :D

bugfaceuk

Apr 10, 07:00 AM

Brilliant! then a family of five can all play scrabble or monopoly for the low low cost of $1,495*

*listed price includes iDevices only. Apple tv required to play. Apple tv, monopoly and scrabble sold separately.

Anyone who buys iOS devices to play Scrabble is an idiot. People who use their existing iOS devices to play together have a lot of fun.

Shivetya

Apr 28, 12:29 PM

It's not like the market for $1000+ computers is inexhaustible. They had to throw in tablets while they can to maintain market position because once the cheap tablets start coming out (and they will, it took a while for notebooks to get cheap and look at where they are now).

Multimedia

Oct 20, 12:59 PM

Now to pre-arrange for the 8-core Mac Pro's arrival next month. :)

I'm now working with

Two 20" - 1600 x 1200 Dells

One 24" - 1920 x 1200 Dell

One 30" - 2560 x 1600 Dell

Two 15" - 1024 x 768 Original 15" Analog Bondi Blue Apple Studio Displays

2 PowerMac G5's Quad, 2GHz Dual Core + 1 Old 1.25GHz PowerBook G4

2 G4 Cubes

for a total of 9 cores totaling 16.2GHz. :p

Original retail cost of all of the above about $13,000

New 8-Core Mac Pro @ 2.66GHz each totaling 21.28GHz for about $4,000

flopticalcube

Apr 15, 01:16 PM

Ok, replace "True" with "Orthodox". Mainstream Protestant, Roman Catholic, Eastern Orthodox, Greek Orthodox. Pretty much believe the same things. You can even throw some non-orthodox sects in there like the Mormons and still have a huge intersect on beliefs, especially on morality.

Except for the fastest growing contingent of Christians in the world, the evangelicals. Like I said, you are all finger pointing and being smug in your own belief as to the true interpretation. How laughable. If you are all true Christians, why is there more than one church?
